Apple Detection in Orchards

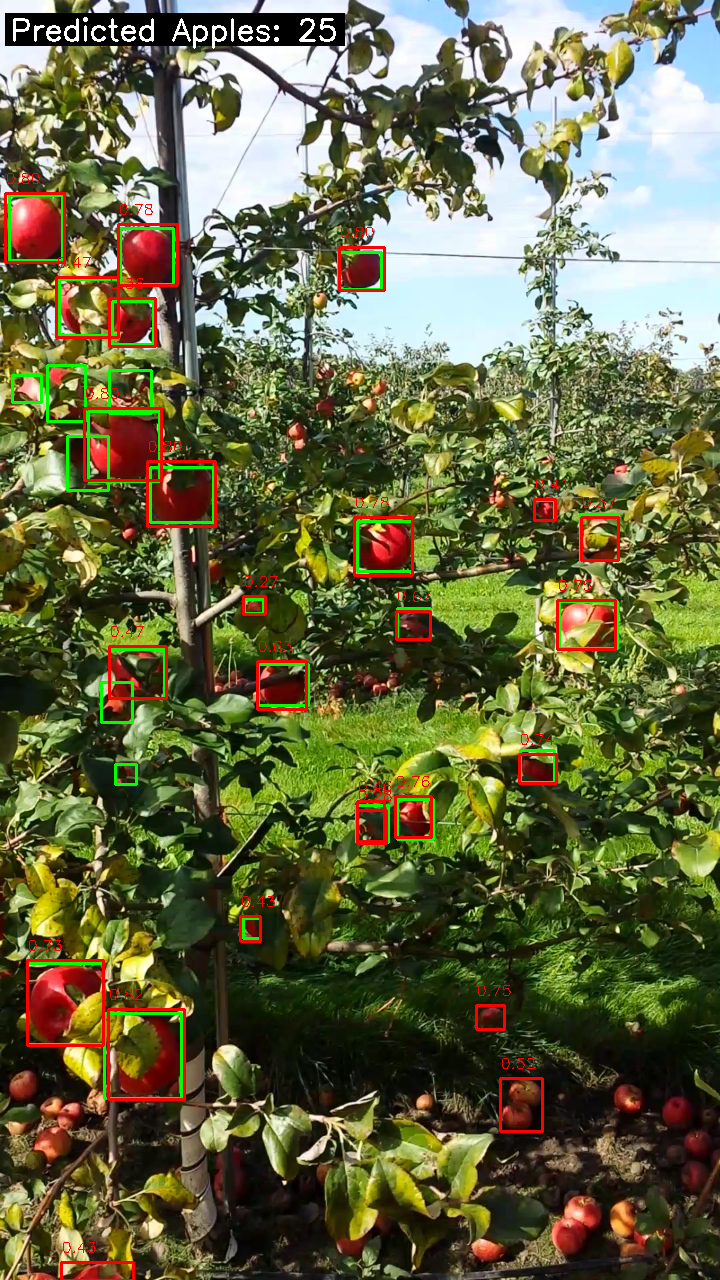

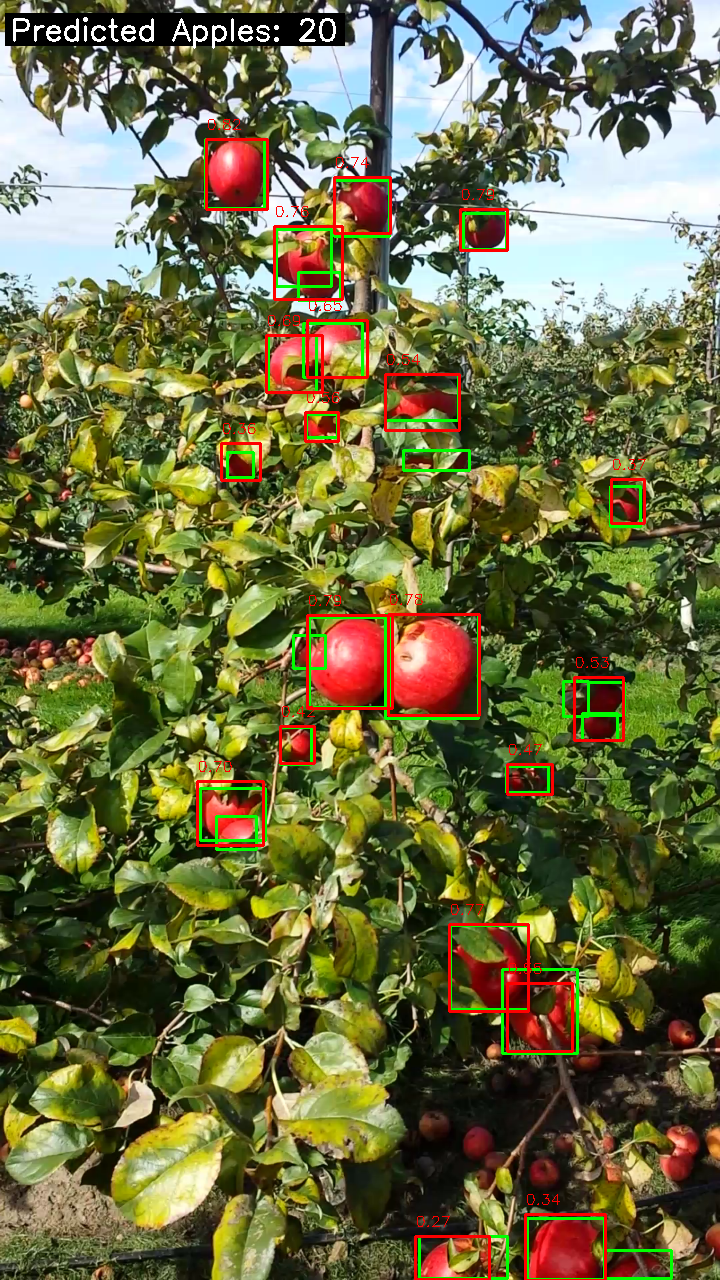

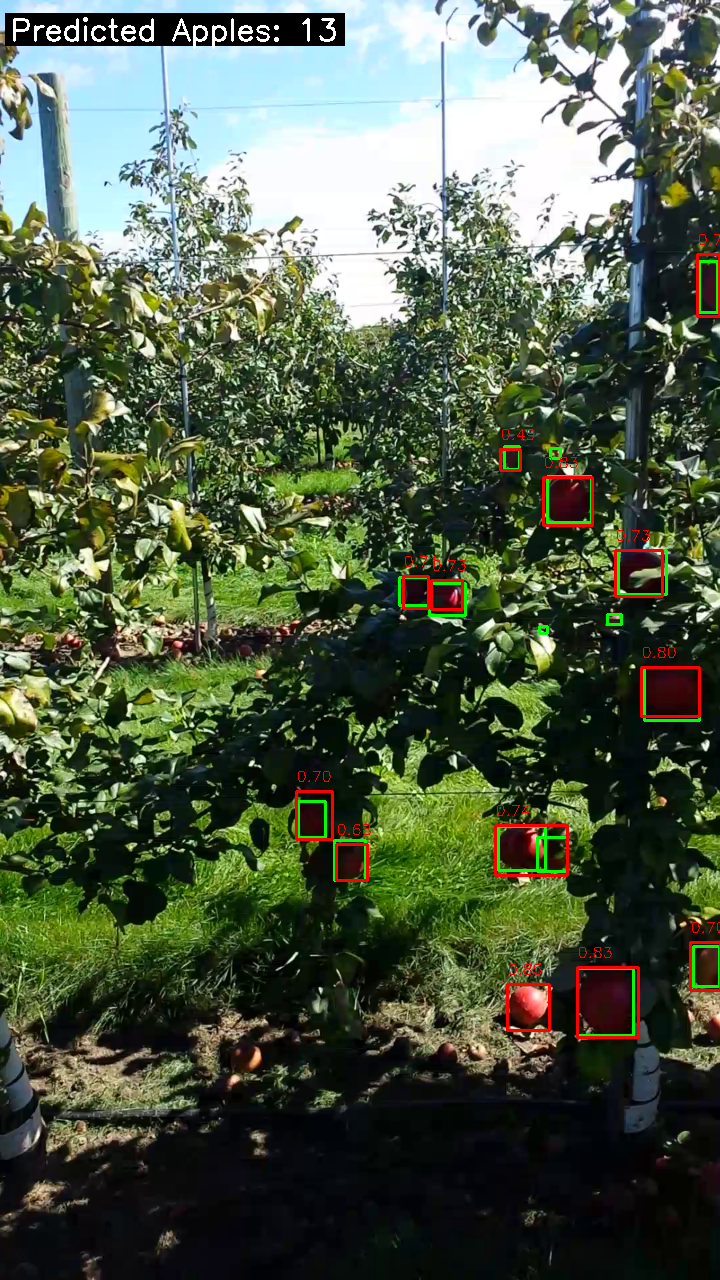

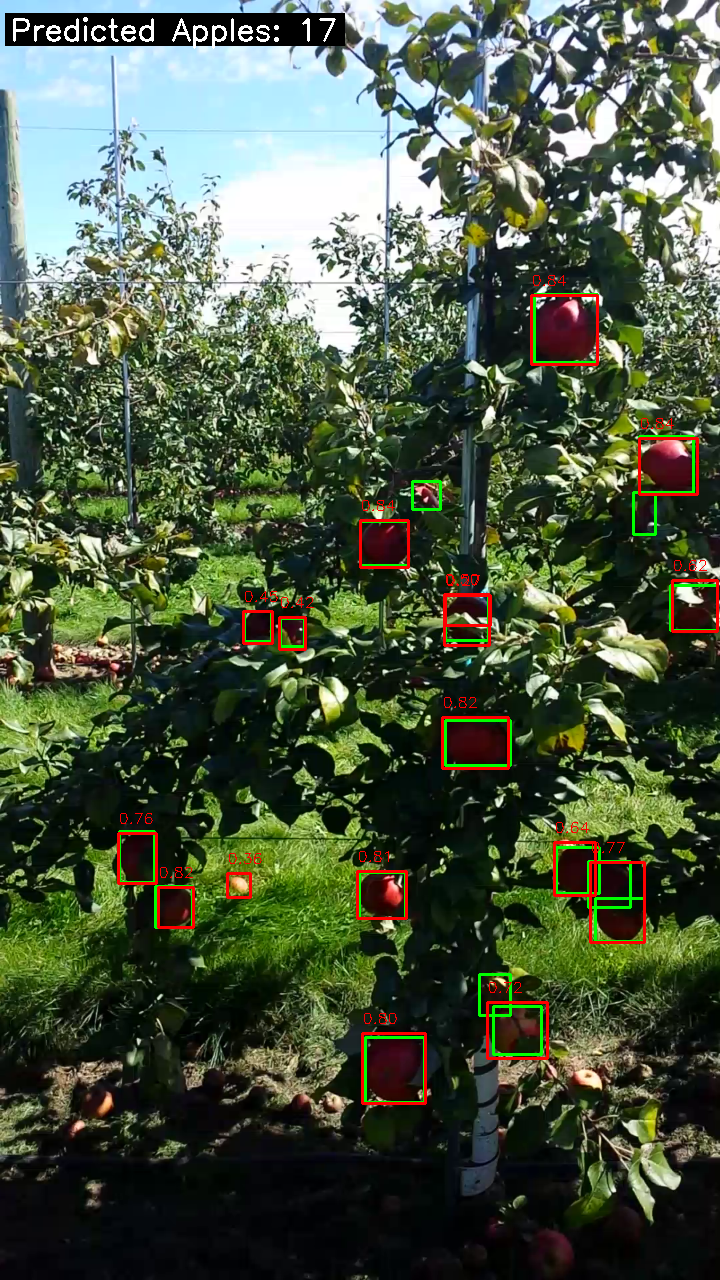

This project uses object detection models such as YOLO and Faster R-CNN (FRCNN) to detect apples in orchards, addressing the need for efficient and precise fruit detection in agricultural automation.

What is YOLO (You Only Look Once)?

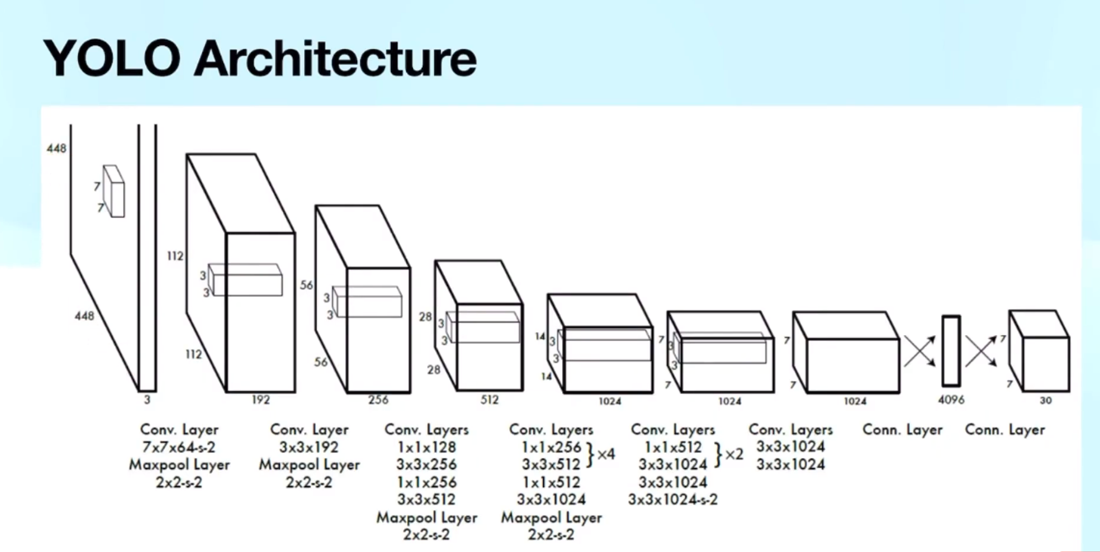

YOLO is a real-time object detection algorithm. It processes the entire image in a single forward pass and predicts bounding boxes and class probabilities directly from the image pixels. Its speed and efficiency make it suitable for applications like real-time fruit detection in agriculture.

- Single-stage detector that provides high-speed predictions.

- Divides the image into a grid and predicts bounding boxes for each grid cell.

- Achieves a good balance between accuracy and speed.
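
The grid idea above can be sketched in a few lines. This is a minimal illustration in the style of the original YOLO (v1) formulation, not the code used in this project: the function names, the 7x7 grid, and the box format `(x_min, y_min, x_max, y_max)` are assumptions, and later YOLO versions use anchors and multi-scale heads instead.

```python
def responsible_cell(box, image_w, image_h, grid_size=7):
    """Return (row, col) of the grid cell that contains the box center.

    box is (x_min, y_min, x_max, y_max) in pixels (assumed format).
    """
    x_min, y_min, x_max, y_max = box
    cx = (x_min + x_max) / 2.0
    cy = (y_min + y_max) / 2.0
    # Scale the center into grid units, then clamp so a center lying
    # exactly on the right or bottom edge still maps to a valid cell.
    col = min(int(cx / image_w * grid_size), grid_size - 1)
    row = min(int(cy / image_h * grid_size), grid_size - 1)
    return row, col


def encode_box(box, image_w, image_h, grid_size=7):
    """Encode a box as (row, col, x_offset, y_offset, width, height).

    Offsets are the center position inside its cell, in [0, 1).
    Width and height are fractions of the whole image.
    """
    x_min, y_min, x_max, y_max = box
    row, col = responsible_cell(box, image_w, image_h, grid_size)
    gx = (x_min + x_max) / 2.0 / image_w * grid_size
    gy = (y_min + y_max) / 2.0 / image_h * grid_size
    width = (x_max - x_min) / image_w
    height = (y_max - y_min) / image_h
    return row, col, gx - col, gy - row, width, height
```

For a 448x448 image, an apple with box `(100, 100, 200, 200)` has its center at `(150, 150)`, so the cell at row 2, column 2 is the one responsible for predicting it.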

What is FRCNN (Faster R-CNN)?

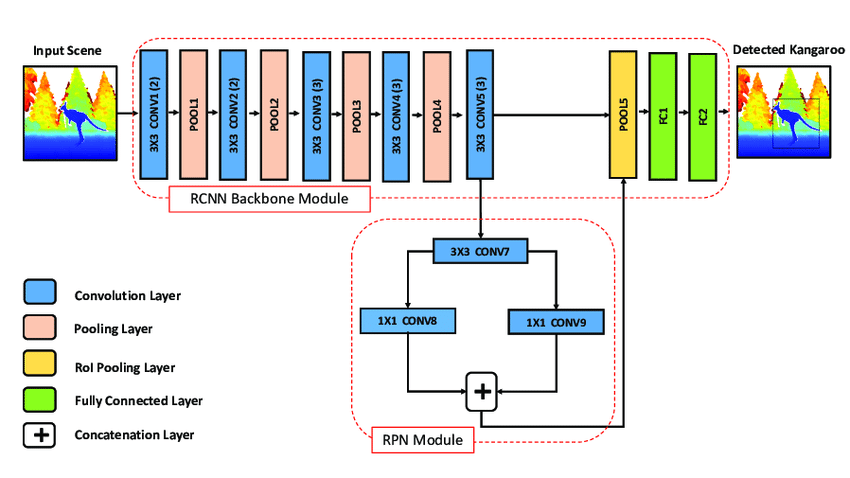

Faster R-CNN is a two-stage object detection model that improves on its predecessors, R-CNN and Fast R-CNN. It uses a Region Proposal Network (RPN) to generate regions of interest, which makes it significantly faster than those earlier models while maintaining accuracy.

- Two-stage detector for higher accuracy.

- Uses a Region Proposal Network (RPN) to generate candidate object proposals.

- Highly accurate but slower than YOLO.
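
To make the proposal step more concrete, the sketch below generates the anchor boxes that an RPN scores at a single location. It assumes the three scales and three aspect ratios described in the Faster R-CNN paper; the function name `make_anchors` is hypothetical, and the anchor settings actually used in this project are not stated.

```python
import math


def make_anchors(cx, cy, scales=(128, 256, 512), ratios=(0.5, 1.0, 2.0)):
    """Return anchor boxes (x_min, y_min, x_max, y_max) centered on (cx, cy).

    Each ratio is height / width, and every anchor keeps an area of
    roughly scale ** 2, so only the shape changes within one scale.
    """
    anchors = []
    for scale in scales:
        for ratio in ratios:
            width = scale / math.sqrt(ratio)
            height = scale * math.sqrt(ratio)
            anchors.append((
                cx - width / 2.0,
                cy - height / 2.0,
                cx + width / 2.0,
                cy + height / 2.0,
            ))
    return anchors
```

With the defaults this yields 9 anchors per location. The RPN then predicts, for each anchor, an objectness score and a box refinement, and the highest-scoring proposals are passed to the second stage for classification.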

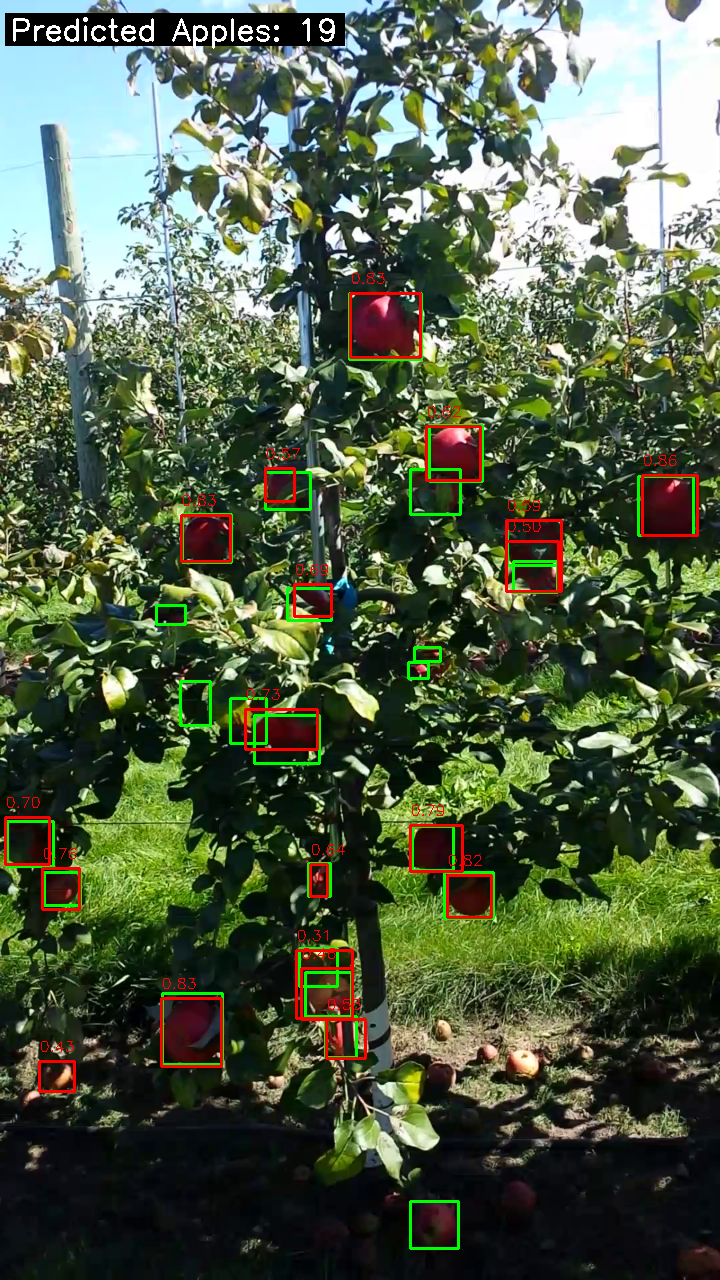

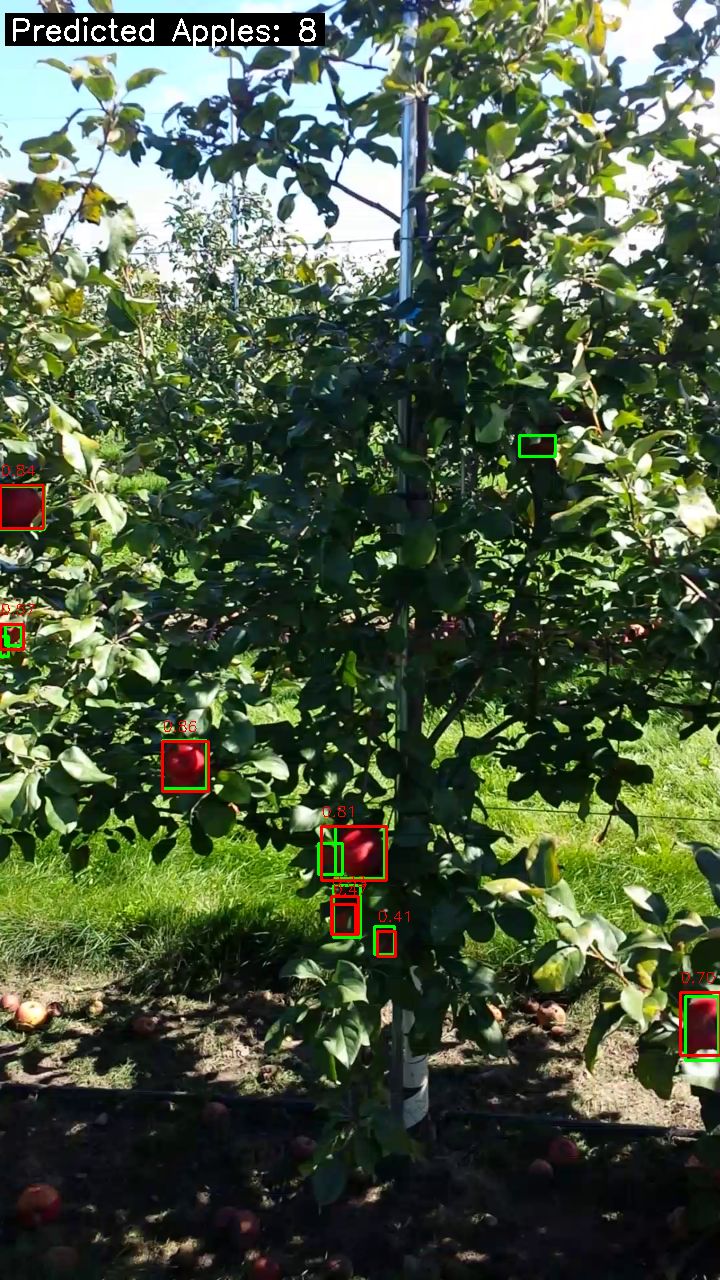

The following are the results extracted from the data. The lower accuracy is due to the dataset and its ground truth: the dataset has no ground-truth bounding boxes for apples that have fallen on the ground. As a result, many detections were counted as false positives, which reduced the precision.

| Avg. true positives (T+) | Avg. false positives (F+) | Avg. false negatives (F-) | mAP | mAR | Avg. inference time |
|---|---|---|---|---|---|
| 21.85 | 8.98 | 17.71 | 0.71 | 0.54 | 2.5 ms |
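
The sketch below shows one common way such counts can be obtained and turned into precision and recall. It is an illustration under stated assumptions, not the evaluation code used here: the greedy one-to-one matching, the 0.5 IoU threshold, and all function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def count_matches(detections, ground_truth, iou_threshold=0.5):
    """Greedily match detections to ground truth; return (tp, fp, fn).

    detections is assumed to be sorted by confidence, highest first.
    Each ground-truth box can be matched at most once.
    """
    unmatched = list(ground_truth)
    tp = 0
    for det in detections:
        best = max(unmatched, key=lambda gt: iou(det, gt), default=None)
        if best is not None and iou(det, best) >= iou_threshold:
            unmatched.remove(best)
            tp += 1
    fp = len(detections) - tp  # detections with no ground-truth match
    fn = len(unmatched)        # ground-truth boxes never detected
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP), recall = TP / (TP + FN)."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / (tp + fn) if (tp + fn) > 0 else 0.0
    return precision, recall
```

For example, with one labeled apple `(10, 10, 50, 50)` and two detections, `(12, 12, 50, 50)` on the tree and `(100, 200, 140, 240)` on an unlabeled fallen apple, `count_matches` returns `(1, 1, 0)`: the fallen apple is counted as a false positive even though the detection is correct, which is the effect described above. Applying `precision_recall` to the averaged counts in the table gives roughly 0.71 and 0.55, close to the reported mAP and mAR. Small differences are expected, since a ratio of averages is not the same as an average of per-image ratios.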